In the many discussions and architecture approaches I’m reading about Micro-Services, a key point seems to elude the conversation. Namely, “What’s a Micro-Service?” Not what are the technical properties of a micro-service, but rather what level of BUSINESS functionality should be encapsulated in a single service, a single “micro” service?

One “formal” definition that’s floating around is this…

...an approach to developing a single application as a suite of small services, each running in its own process and communicating with lightweight mechanisms, often an HTTP resource API. These services are built around business capabilities and independently deployable by fully automated deployment machinery. There is a bare minimum of centralized management of these services, which may be written in different programming languages and use different data storage technologies. - James Lewis and Martin Fowler via http://techbeacon.com/

From the SOA (service-oriented architecture) perspective, decomposing an application into its composite transactions and business objects, and wrapping them in accessible services, has been the trend for the past 15 years or so. Applications have been turning into business engines, and in some cases the interface layer itself has been successfully moved from having low-level interaction with the business code to using transaction / object / service layers. Seeing an application, platform, or suite that offers a full catalog of accessible transactions and objects (wrapped in services) has become the norm, and classes are often structured the same way at the code level.

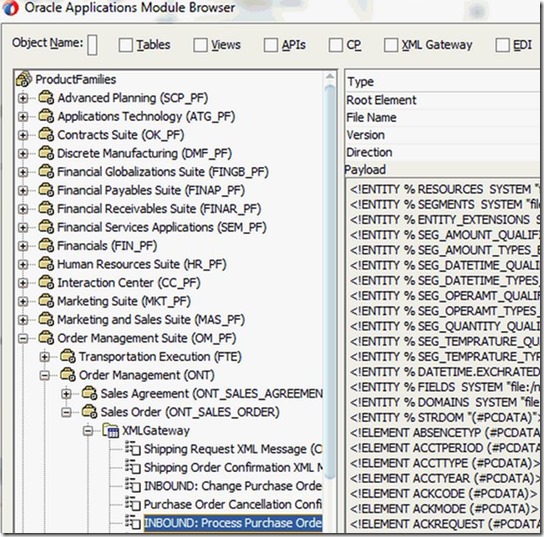

Example – Oracle e-Business Suite service catalog…

The micro-services architecture concept comes along and says “no no no, services should stand on their own”, to the technical point of being divorced from their implementation platform and container. But at what level of granularity should BUSINESS SERVICES be implemented? Can we build “virtual” applications that are logical composites of many “business micro services”, or do business transactions remain grouped as the combined code we refer to as “applications” and/or rolled up into “suites”?

Years ago I gave a presentation describing how applications were decomposing as they became integrated with, and linked in real time to, other applications, thereby becoming distributed applications – with a follow-up discussion of integration reliability, monitoring, control, security, etc. The primary change of that generation was the application no longer standing on its own, even though that “its own” may have included data being imported or updated from other systems regularly. The distributed application moved data out of its own system and onto an integration connection point, with the advantage of making the data (or transaction) much more up-to-date – (semi) real-time data and transaction movement.

But, my customers asked me, what if we are developing NEW applications? My answer - we would want to bundle the functionality differently, to allow more flexible combinations.

• Break our “Application” model into…

− Transaction Components

− Process Components

− Entities

− all exposed as Services

• Compose, Coordinate, Combine them into…

− Business Processes

− User Presentations

− meaning “Applications”

At the time, this still presented significant issues of managing, controlling, tracking, and securing. API Management and Micro Services would seem (in theory, it’s early days) to resolve these issues. Which means we can get to a direct discussion of granularity of BUSINESS SERVICES, allowing for BUSINESS ORIENTED MICRO SERVICES.

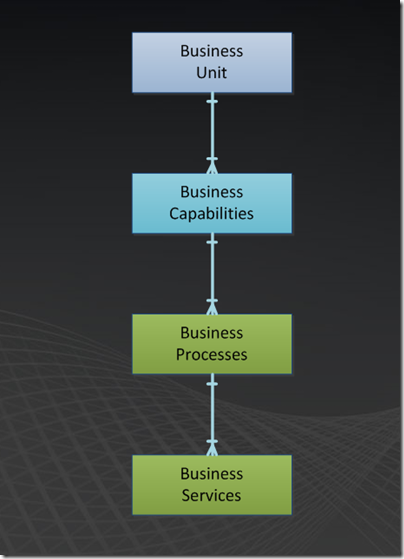

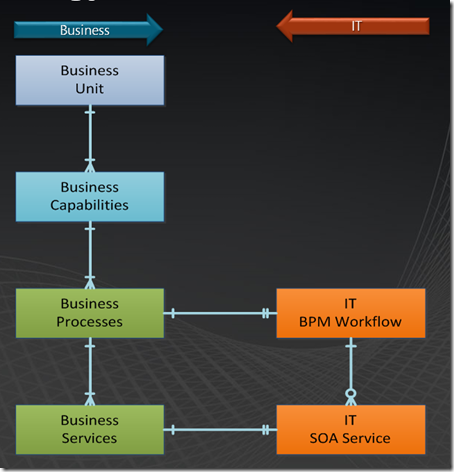

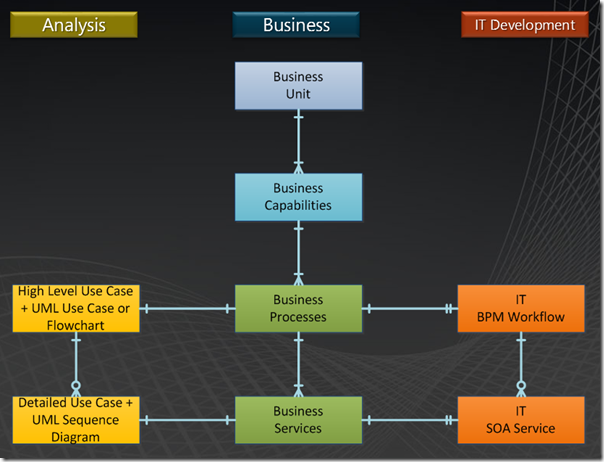

A Business Unit has one or many Business Capabilities. A Business Capability is a series of one or many Business Processes. And a Business Process has one or many steps, each being a Business Service. From the IT perspective…

The Business Processes map to Workflows or Business Process Automations from the IT implementation perspective. In older applications, workflow was encoded in the application itself. Nowadays, if the Business Services are encapsulated and exposed, they can be orchestrated externally via BPM (business process management), BPA (business process automation), or other integration layer controllers.
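To make the mapping concrete, here is a minimal sketch in Python of a Business Capability holding Business Processes, where each process is an ordered list of Business Service steps run by an external orchestrator. Everything in it is an assumption for illustration: the class names, the "Accounts Payable" and "Invoice Handling" labels, and the use of plain functions in place of remote service calls. It is not how any particular BPM or BPA product works.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical: a Business Service is modelled as a function that takes a
# context dict and returns an updated one. In practice it would be a remote call.
BusinessService = Callable[[Dict], Dict]


@dataclass
class BusinessProcess:
    """A Business Process is one or many steps, each a Business Service."""
    name: str
    steps: List[BusinessService] = field(default_factory=list)

    def run(self, context: Dict) -> Dict:
        # External orchestration: the workflow lives here, not in the services.
        for step in self.steps:
            context = step(context)
        return context


@dataclass
class BusinessCapability:
    """A Business Capability is a series of one or many Business Processes."""
    name: str
    processes: List[BusinessProcess] = field(default_factory=list)


# Hypothetical service implementations, named after the invoice functions
# discussed in this article.
def create_invoice(ctx: Dict) -> Dict:
    return {**ctx, "invoice_id": 1, "status": "created"}


def update_invoice(ctx: Dict) -> Dict:
    return {**ctx, "status": "updated"}


def pay_invoice(ctx: Dict) -> Dict:
    return {**ctx, "status": "paid"}


invoice_handling = BusinessProcess(
    "Invoice Handling", [create_invoice, update_invoice, pay_invoice]
)
accounts_payable = BusinessCapability("Accounts Payable", [invoice_handling])

result = invoice_handling.run({"amount": 100})
```

The services know nothing about the order in which they are called, so the same steps could be recombined into a different process without touching their code.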

The question is, of what granularity should those Business Services be, and therefore the corresponding IT Services? How “micro” should a “micro-service” be? Is “Invoice Management” with “Create Invoice / Query Invoice / Update Invoice / Pay Invoice” a (large) micro-service, or is “Invoice Object” a micro-service encapsulating only the persistence and structure of the invoice, with Create / Query / Update / Pay Invoice each being a separate micro-service (along with the connectivity overhead and external orchestration overhead that implies)? And would breaking the functionality down to that level of granularity offer sufficient value to compensate for the added overhead?
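The two options can be sketched side by side. This is an illustrative Python sketch only: the class and function names are hypothetical, the in-memory dictionaries stand in for real persistence, and the `calls` counter is a stand-in for hops that would cross a service boundary in a real deployment.

```python
from typing import Dict


class InvoiceManagementService:
    """Coarse-grained option: one service owns storage and all four functions."""

    def __init__(self) -> None:
        self._invoices: Dict[int, Dict] = {}
        self._next_id = 1

    def create(self, amount: float) -> int:
        invoice_id = self._next_id
        self._next_id += 1
        self._invoices[invoice_id] = {"id": invoice_id, "amount": amount, "status": "open"}
        return invoice_id

    def query(self, invoice_id: int) -> Dict:
        return dict(self._invoices[invoice_id])

    def update(self, invoice_id: int, amount: float) -> None:
        self._invoices[invoice_id]["amount"] = amount

    def pay(self, invoice_id: int) -> None:
        self._invoices[invoice_id]["status"] = "paid"


class InvoiceObjectService:
    """Fine-grained option: encapsulates only invoice structure and persistence."""

    def __init__(self) -> None:
        self._invoices: Dict[int, Dict] = {}
        self._next_id = 1
        self.calls = 0  # counts calls that would cross a service boundary

    def save(self, invoice: Dict) -> Dict:
        self.calls += 1
        if "id" not in invoice:
            invoice = {**invoice, "id": self._next_id}
            self._next_id += 1
        self._invoices[invoice["id"]] = dict(invoice)
        return dict(invoice)

    def load(self, invoice_id: int) -> Dict:
        self.calls += 1
        return dict(self._invoices[invoice_id])


# Each function below stands in for a separate micro-service.
def create_invoice_service(store: InvoiceObjectService, amount: float) -> Dict:
    return store.save({"amount": amount, "status": "open"})


def pay_invoice_service(store: InvoiceObjectService, invoice_id: int) -> Dict:
    invoice = store.load(invoice_id)
    invoice["status"] = "paid"
    return store.save(invoice)


# Coarse-grained usage: two calls into one service.
coarse = InvoiceManagementService()
coarse_id = coarse.create(100.0)
coarse.pay(coarse_id)
coarse_status = coarse.query(coarse_id)["status"]

# Fine-grained usage: the same work also costs calls into the object service.
store = InvoiceObjectService()
created = create_invoice_service(store, 100.0)
paid = pay_invoice_service(store, created["id"])
```

In the fine-grained version, creating and paying one invoice costs three calls to the Invoice Object service on top of the two calls the orchestrator makes to the function services. That is the connectivity overhead the question above weighs against the flexibility of swapping out individual functions.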

The answer is unclear. The pure technology proponents would tell us to go full granularity, and not concern ourselves with the connectivity overhead – as that’s a container and implementation level problem – nor concern ourselves with the orchestration overhead, as that’s an analysis and mapping problem (with its own implementation overhead). The deploying, hosting, and tracking of all those services should also be “easily” handled and managed by the environment. We’ll see.

Martin Heller at TechBeacon writes, “If a service looks cohesive and deals only with a single concern, then it's probably small enough. If, on the other hand, you look at a service interface and see a number of different concerns being combined, perhaps it's a candidate for further decomposition. At the other end of the spectrum, if the service doesn't do enough to feel useful, perhaps you overdid the decomposition and need to combine it with a related service. It's very much like the game of "find the objects" that people played when designing object-oriented software 25 years ago, but now the objects are services, not classes.”

I believe this ties in nicely with the picture above. A “business service”, such as Invoice Management, with its functions of create / query / update / pay, composes nicely into an IT service…and therefore (in the new terminology) a “micro-service”. The only reason to decompose further would be IF there was a desire or ability to substitute the granular functionality with that of other developers, development teams, or application vendors. And while that’s conceptually feasible, managing service catalogs at that level is simply not (yet) reasonably viable.

What this says to me is that the ONLY difference between Micro-Services and the SOA Services of last year is the expectation that the corresponding code is sufficiently encapsulated to be independently deployable. And while that independent deployability offers some interesting theoretical advantages, those advantages bring major management and control issues that have not fared well in practice. Example – managing the service catalog. SOA Governance, particularly design time governance, has not been successful in the field. Few IT enterprises have gotten the ROI from the vendor offerings in this space. API Management seems to be changing that, but the point remains: managing and coordinating hundreds to thousands of cooperating services is a daunting task.

I would advise stepping carefully into Micro-Services. Try a few small projects to understand the dynamics of the use pattern AND the management pattern. There are advantages to be had, but risks as well. It’s likely we’re seeing the future of software development. But the control structures and supporting architecture patterns are not yet in place. Tread carefully.