With best of breed, we might end up with one company’s ERP system, another company’s CRM system, a third company’s manufacturing system and a fourth company’s financials.

With historical integration patterns, data interchange between the systems was mostly handled by large-scale data exports and imports, usually performed as batch processes at the end of the day (or end of the week, or end of the month). These processes could be described as “dump all the (insert primary data type here, such as customers), then dump all the (day’s/week’s/month’s) transactions,” followed by a specially written import program that read the foreign system’s format and wrote the transactions into the target system, either directly into its database or through its exposed transaction API.
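
To make the pattern concrete, here is a minimal Python sketch of such an import program, assuming the foreign system dumps customers and transactions as CSV. The column names (`CUST_NO`, `TXN_DATE`, `AMT`), the `Transaction` record and the `post` callback standing in for the target’s transaction API are all hypothetical. Customers are loaded before transactions so that any transaction pointing at an unknown customer can be set aside instead of posted.

```python
import csv
import io
from dataclasses import dataclass
from datetime import date
from decimal import Decimal
from typing import Callable

# Hypothetical column mapping: foreign export column -> local field name.
TXN_COLUMNS = {"CUST_NO": "customer_id", "TXN_DATE": "posted_on", "AMT": "amount"}


@dataclass
class Transaction:
    customer_id: str
    posted_on: date
    amount: Decimal


def read_customers(export_text: str) -> set[str]:
    """Parse the 'dump all the customers' file and return the customer IDs."""
    return {row["CUST_NO"].strip() for row in csv.DictReader(io.StringIO(export_text))}


def read_transactions(export_text: str) -> list[Transaction]:
    """Parse the 'dump all the day's transactions' file into local records."""
    txns = []
    for row in csv.DictReader(io.StringIO(export_text)):
        # Keep only the columns we know how to map, renamed to local field names.
        f = {TXN_COLUMNS[k]: v.strip() for k, v in row.items() if k in TXN_COLUMNS}
        txns.append(Transaction(
            f["customer_id"],
            date.fromisoformat(f["posted_on"]),
            Decimal(f["amount"]),
        ))
    return txns


def run_batch_import(customers_csv: str, txns_csv: str,
                     post: Callable[[Transaction], None]) -> tuple[int, list[Transaction]]:
    """Master data first, then transactions, each posted via the (hypothetical) target API.

    Transactions that reference unknown customers are returned as rejects rather than posted.
    """
    known = read_customers(customers_csv)
    posted, rejected = 0, []
    for txn in read_transactions(txns_csv):
        if txn.customer_id in known:
            post(txn)  # stand-in for a database write or transaction API call
            posted += 1
        else:
            rejected.append(txn)
    return posted, rejected
```

Passing the target API in as a callback keeps the parsing and mapping testable on their own; in the nightly job it would be replaced by whatever write path the receiving system actually exposes.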

Because these systems were chained along the full business process, the later systems in the chain might not be updated for 3 or 4 days (assuming a daily batch at each hop). Further, other systems that came along needing the same data wouldn’t necessarily go to the primary source (let’s say the first system in the chain); they would go to the easiest access point for the data (whichever system had the easiest API to use or easiest database to access) to pull it.

But today, in our best of breed scenario, there is an expectation of real-time feeds between the systems. The integrations are significantly harder because the systems have to be intricately connected, dealing with differences in data formats, connection protocols and paradigms, and transaction processing models.

The integration effort in the best of breed scenario has gone up significantly, and the success or failure of the primary systems is completely dependent on a successful, reliable, intricate integration!

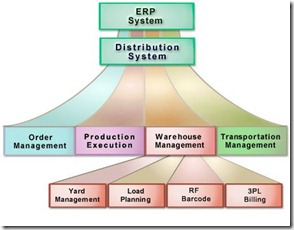

In the case of the suite approach, each individual module (or major application) may not be the best-in-class solution. Some parts of the suite may be excellent, others average and some just barely acceptable. Yet, if the vendor has done their job well, the various modules are already well integrated. Not having to build, manage and maintain an integration layer among the suite components is the key advantage of the suite.

Does that mean I recommend the suite approach over the best of breed approach? Not necessarily. There are good counterarguments, such as avoiding vendor lock-in and being able to take advantage of particular applications that offer exceptional abilities in their area.

So how does one deal with a best of breed approach, whether total or partial (say you use SAP for many things but still use a few other solutions)?

The answer is integration processes and standards. Building a well-defined integration architecture layer that provides logical decoupling is critical, as is pushing your internal IT shop (and, if possible, your vendors) to adopt industry-standard XML formats.
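
As a rough illustration of what logical decoupling buys you, the Python sketch below puts a canonical XML `Customer` message between the systems. Each source system gets its own inbound adapter that maps its native fields to the canonical form, and consumers subscribe to the integration layer and only ever parse the canonical message. The element names, the source field names and the `IntegrationLayer` class are hypothetical; in practice you would adopt an industry XML schema and a real messaging product rather than an in-memory list of subscribers.

```python
import xml.etree.ElementTree as ET
from typing import Callable


def to_canonical(customer_id: str, name: str, country: str) -> str:
    """Build the (hypothetical) canonical Customer message as an XML string."""
    root = ET.Element("Customer")
    ET.SubElement(root, "CustomerID").text = customer_id
    ET.SubElement(root, "Name").text = name
    ET.SubElement(root, "Country").text = country
    return ET.tostring(root, encoding="unicode")


def parse_canonical(xml_text: str) -> dict:
    """Consumers read only the canonical form, never a source system's native format."""
    return {child.tag: child.text for child in ET.fromstring(xml_text)}


# Inbound adapters: one per source system, each mapping hypothetical native fields.
def from_crm(record: dict) -> str:
    return to_canonical(record["acct_no"], record["acct_name"], record["iso_country"])


def from_erp(record: dict) -> str:
    return to_canonical(record["CUSTNO"], record["CUSTNAME"], record["CTRY"])


class IntegrationLayer:
    """In-memory stand-in for a message bus: publishers and subscribers never meet."""

    def __init__(self) -> None:
        self._subscribers: list[Callable[[str], None]] = []

    def subscribe(self, handler: Callable[[str], None]) -> None:
        self._subscribers.append(handler)

    def publish(self, canonical_xml: str) -> None:
        # Every message is delivered, in canonical form, to every subscriber.
        for handler in self._subscribers:
            handler(canonical_xml)
```

The point of the design is that replacing the CRM means writing one new adapter; none of the subscribers change, because they never see the CRM’s native format.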

And what of SOA suites? Mixing and matching the integration tools is just as challenging as mixing and matching the applications. One can select, say, Software AG CentraSite for Design Time Governance / Services Catalog, IBM DataPower for security enforcement, SOA Software’s ServiceManager for runtime control and monitoring, and Oracle’s Fusion ESB. Technically that should all be possible and should work. On a practical basis, I don’t know of anyone who has succeeded in doing so. (More often one finds most tools from one vendor and perhaps one component from another, with the IT shop taking on the extra work of bridging that one integration point.)

I find it interesting that the proliferation of open standards and easy integration is driving us back to suites. Not because we can’t connect everything but because it’s simply not worth the effort to do so.

Of course, it’s the people trying to do so who keep my work schedule completely full. Hmm, maybe I shouldn’t have written this article.