Encryption

Every web service call should be encrypted with SSL/TLS (HTTPS). Similarly, any web service operation or file transfer sent via FTP should use Secure FTP (S/FTP). Basic-level security requires that the receiving endpoint present a valid signed certificate, but it does not require certificates on both sides or any validation of the certificate beyond confirming that it comes from a valid source on a valid root chain. The objective of this requirement is encryption, not authentication.
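As a rough illustration of this basic level, here is a minimal sketch using only Python's standard `ssl` and `urllib.parse` modules. It assumes a Python client calling the service, and the function names are hypothetical. The context verifies that the server's certificate chains to a trusted root but loads no client certificate, so it provides encryption without mutual authentication. Hostname checking, which Python enables by default, goes slightly beyond the basic level described above; it is cheap and left on here. Refusing protocol versions older than TLS 1.2 is likewise an assumption, not part of the original requirement.

```python
import ssl
from urllib.parse import urlparse


def make_client_tls_context() -> ssl.SSLContext:
    """Client-side context for basic service encryption (one-way TLS).

    Verifies that the server certificate chains to a trusted root CA.
    No client certificate is loaded, so this is encryption, not mutual
    authentication.
    """
    # create_default_context() loads the system's trusted root CAs and sets
    # verify_mode=CERT_REQUIRED and check_hostname=True.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Assumption: refuse legacy protocol versions older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def require_https(url: str) -> str:
    """Reject any service endpoint that would be called unencrypted."""
    if urlparse(url).scheme.lower() != "https":
        raise ValueError(f"unencrypted endpoint rejected: {url}")
    return url
```

A client would then pass the context to the standard library call, for example `urllib.request.urlopen(require_https(url), context=make_client_tls_context())`.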

Encryption is frequently argued against: “It’s high overhead and slows things down too much,” and “Setting up security on every server is time-consuming.”

First, we must layer our security. By exposing services, we’re transmitting internal application data outside the security perimeter of the application! This data must be protected from view, and dropping a network sniffer onto a developer’s workstation or onto a development or test server is a trivial exercise. So even if there is some overhead, we must provide some protection. Second, today’s server CPUs process SSL with little overhead (3 to 8%), and even less if there’s a DataPower appliance in the loop, since it is optimized for this work. As for setup time, it is brief and a one-time cost per server.

Basic Service Security

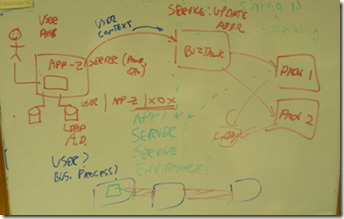

Every service call should be required to include:

a. The Requesting Application

b. The Requesting Server (IP)

c. The Requesting Environment (version, platform)

d. The User Context (what user in the requesting App activated a function needing this service)

e. Authorization ID and password

-- i. Specific to this service, or shared by all calls from that application?

-- ii. Different per environment (Dev, Test, Production)
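One way to picture items a through e is as a single record that travels with every call. The sketch below is hypothetical: the class names, fields, and credential table are illustrations, not a standard. It keys credentials by application and environment so that Dev credentials are rejected by Production (item e.ii); a per-service scheme (item e.i) would add the service name to the key. The server IP (item b) is intentionally not a field, since the provider should read it from the connection rather than trust a value in the message.

```python
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    name: str      # c. e.g. "Dev", "Test", "Production"
    version: str   # c. requesting application's version
    platform: str  # c. e.g. "Linux/Java"


@dataclass(frozen=True)
class ServiceRequestContext:
    """Hypothetical record of the security data sent with every call.

    b. (the requesting server's IP) is deliberately absent: the provider
    reads it from the network connection instead of trusting the message.
    """
    requesting_app: str       # a. the requesting application
    environment: Environment  # c. the requesting environment
    user_id: str              # d. user who triggered the call
    auth_id: str              # e. authorization ID
    password: str             # e. password


# Hypothetical credential table keyed by (application, environment), so Dev
# credentials are rejected in Production (e.ii). Hard-coded secrets are for
# illustration only.
CREDENTIALS = {
    ("CustomerService", "Dev"): ("cs-dev", "dev-secret"),
    ("CustomerService", "Production"): ("cs-prod", "prod-secret"),
}


def credentials_valid(ctx: ServiceRequestContext) -> bool:
    expected = CREDENTIALS.get((ctx.requesting_app, ctx.environment.name))
    if expected is None:
        return False
    expected_id, expected_pw = expected
    # compare_digest avoids leaking, via timing, how much of the password matched.
    return ctx.auth_id == expected_id and hmac.compare_digest(
        ctx.password.encode(), expected_pw.encode()
    )
```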

Depending on whether you’re handling this manually or not, some of this information will be included automatically. Note that we’re not discussing HOW this information is included (for example, SAML for the authorization ID/password and/or WS-Security for some of the other information), but rather WHAT should be included.

Security controls will differ by organization and by the maturity of the SOA implementation, but here’s what needs to be known:

- The Requesting Application. The simplest level of access control is to specify which other applications may use the exposed service. For example, the Customer Service application can call the Get Bill service of the Billing System.

- The Requesting Server. We want to make sure the service is being called not only from an application that is allowed to do so, but also from an instance of that application that is allowed to do so. A simple way to do this is to check the source IP of the request. Yes, this is WEAK and easily spoofed (it’s not difficult to fake the source IP of a TCP/IP request). But it’s simple and better than nothing. (Note that the source IP is already in the network packet, so it doesn’t need to be added, just checked.)

- The Requesting Environment. Cross-connecting development or test systems with production is a fairly common SOA mistake. The security layer should protect against this, but we must give it enough information to do so.

- The User Context. Some environments have a federated identity model in place. This may be as simple as Microsoft’s Active Directory or as complex as IBM Tivoli Identity Manager, which manages identity across multiple technology platforms (such as Windows, Unix, and mainframe). If so, the user context may not need to be handled by the SOA security at all, or the SOA security may only need to pass along a token from the identity environment. In either case, the user context in the application requesting a service should be passed to the application(s) exposing the service(s) for auditing purposes (logging who requested this action) and for security purposes (checking whether that user is authorized to request this action). This can be challenging in an environment without identity management: when we connect the applications, the requesting application’s entire user base may need authorization entries in the providing application’s security controls. As a result, many organizations ignore this area. This is a mistake. Even if the user context is not used for actual authorization, it should be logged for auditing purposes.
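The four checks above can be sketched together in a few lines of Python using the standard `ipaddress`, `json`, and `logging` modules. Everything here is an assumption for illustration: the policy tables, the `service.audit` logger name, and the record fields are hypothetical, and in practice the policy would come from configuration or a gateway rather than constants. The sketch denies by default, takes the server IP from the connection (in a WSGI application, for example, `environ["REMOTE_ADDR"]`) rather than from the message, and always logs the user context even though it is not used for the decision. If a proxy or load balancer sits in front of the service, the connection shows the proxy’s address, which this sketch does not handle.

```python
import ipaddress
import json
import logging
from datetime import datetime, timezone

audit_log = logging.getLogger("service.audit")

# Hypothetical policy tables; real ones would come from configuration.
SERVICE_ACL = {
    # Requesting Application: which applications may call each service.
    "GetBill": {"CustomerService"},
}
APP_SERVER_NETWORKS = {
    # Requesting Server: where each application's approved instances live.
    "CustomerService": [ipaddress.ip_network("10.20.30.0/24")],
}
PROVIDER_ENVIRONMENT = "Production"  # this service instance's environment


def source_ip_allowed(app: str, peer_ip: str) -> bool:
    """Weak check: source IPs can be spoofed, and proxies hide them."""
    try:
        addr = ipaddress.ip_address(peer_ip)
    except ValueError:
        return False
    return any(addr in net for net in APP_SERVER_NETWORKS.get(app, []))


def authorize_call(service: str, app: str, peer_ip: str,
                   caller_env: str, user_id: str) -> dict:
    """Apply the application, server, and environment checks (default deny)
    and write an audit record that includes the user context either way."""
    if app not in SERVICE_ACL.get(service, set()):
        reason = "application not allowed"
    elif not source_ip_allowed(app, peer_ip):
        reason = "server not allowed"
    elif caller_env.strip().lower() != PROVIDER_ENVIRONMENT.lower():
        reason = "environment mismatch"
    else:
        reason = "ok"
    record = {
        "time": datetime.now(timezone.utc).isoformat(),
        "service": service,
        "app": app,
        "server": peer_ip,
        "environment": caller_env,
        # Log the user even when it is not used for the decision, and record
        # a placeholder rather than dropping the field when none was sent.
        "user": user_id or "UNKNOWN",
        "allowed": reason == "ok",
        "reason": reason,
    }
    audit_log.info(json.dumps(record, sort_keys=True))
    return record
```

Because the `service.audit` logger inherits Python’s default WARNING level, nothing is emitted until logging is configured, for example with `logging.basicConfig(level=logging.INFO)`.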